Setting Up a Kubernetes 1.27 Cluster on Ubuntu 22.04

Preparation

The environment in this article is an Ubuntu cluster built with VirtualBox. For the setup steps, see:

Setting Up Ubuntu with VirtualBox on Windows 11

Environment

1. Hardware

- VM specs: Master (2 CPUs, 4 GB RAM, 40 GB disk), Nodes (2 CPUs, 2 GB RAM, 40 GB disk)
- Number of VMs: 3
- VM operating system: ubuntu-22.04.5-live-server-amd64.iso

| Operating system | Hostname | IP |
|---|---|---|
| ubuntu-22.04.5 | k8s-master | 192.168.112.174 |
| ubuntu-22.04.5 | k8s-node1 | 192.168.112.175 |
| ubuntu-22.04.5 | k8s-node2 | 192.168.112.176 |

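2. Disable swap (by default the kubelet refuses to start while swap is enabled)

- Permanently: edit /etc/fstab and comment out the swap entry. This may be a line with the swap partition's UUID or, on a default Ubuntu 22.04 server install, typically the /swap.img line. The change takes effect after a reboot.
- Immediately (until the next reboot): run swapoff -a.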
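This is a minimal sketch of the permanent step for readers who prefer not to edit /etc/fstab by hand. It assumes a standard fstab whose third field is the filesystem type. `disable_swap_in_fstab` is a hypothetical helper name and is not part of any tool.

```bash
# Hypothetical helper: comment out every active swap entry in an fstab-format file.
# The result is written back through the original file, so its owner and mode are kept.
disable_swap_in_fstab() {
  local fstab="${1:-/etc/fstab}" tmp
  tmp="$(mktemp)" || return 1
  # Field 3 of an fstab line is the filesystem type; lines that are already comments are left alone.
  awk '!/^[[:space:]]*#/ && $3 == "swap" { print "#" $0; next } { print }' "$fstab" > "$tmp" \
    && cat "$tmp" > "$fstab"
  local status=$?
  rm -f "$tmp"
  return "$status"
}
```

Run it as root against /etc/fstab, then run `swapoff -a` so swap is also off before the next reboot. Once swap is off, `swapon --show` prints nothing.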

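3. Disable SELinux

Ubuntu 22.04 does not enable SELinux by default; it uses AppArmor instead. This step therefore only applies if SELinux has been installed and enabled:

- Permanently: edit /etc/selinux/config, change SELINUX=enforcing to SELINUX=disabled, and reboot.
- Immediately: run setenforce 0.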

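4. Network configuration

- Configure hostnames and /etc/hosts

```bash
# Set the machine name (k8s-master, k8s-node1 or k8s-node2, matching the table above)
sudo vim /etc/hostname

# Edit the hosts file
sudo vim /etc/hosts
```

Add the following entries to /etc/hosts, adjusting the IP addresses and hostnames to your environment:

```text
192.168.112.174 k8s-master
192.168.112.175 k8s-node1
192.168.112.176 k8s-node2
```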
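On systemd-based Ubuntu, `hostnamectl set-hostname k8s-master` is an alternative to editing /etc/hostname; use the matching name on each node.

For /etc/hosts, the sketch below appends the three entries only if they are missing, so it is safe to re-run. `ensure_host_entry` and `add_cluster_hosts` are hypothetical helper names. The IPs are the ones from the table above.

```bash
# Hypothetical helper: append "IP HOSTNAME" to a hosts-format file unless that
# IP already maps to that hostname, so re-running never duplicates entries.
ensure_host_entry() {
  local file="$1" ip="$2" name="$3"
  if awk -v ip="$ip" -v name="$name" '
       $1 == ip { for (i = 2; i <= NF; i++) if ($i == name) found = 1 }
       END { exit !found }' "$file"; then
    return 0   # mapping already present, nothing to do
  fi
  printf '%s %s\n' "$ip" "$name" >> "$file"
}

# Hypothetical wrapper: add the three cluster nodes to a hosts file (default /etc/hosts).
add_cluster_hosts() {
  local file="${1:-/etc/hosts}"
  ensure_host_entry "$file" 192.168.112.174 k8s-master
  ensure_host_entry "$file" 192.168.112.175 k8s-node1
  ensure_host_entry "$file" 192.168.112.176 k8s-node2
}
```

Run `add_cluster_hosts` as root on every node.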

- Forward IPv4 and let iptables see bridged traffic

```bash
# Load the overlay and br_netfilter kernel modules now and on every boot
cat <<EOF | sudo tee /etc/modules-load.d/k8s.conf
overlay
br_netfilter
EOF

sudo modprobe overlay
sudo modprobe br_netfilter

lsmod | grep br_netfilter   # verify that the br_netfilter module is loaded

# Set the required sysctl parameters; they persist across reboots
cat <<EOF | sudo tee /etc/sysctl.d/k8s.conf
net.bridge.bridge-nf-call-iptables  = 1
net.bridge.bridge-nf-call-ip6tables = 1
net.ipv4.ip_forward                 = 1
EOF

# Apply the sysctl parameters without rebooting
sudo sysctl --system
```
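The Kubernetes documentation verifies these settings with `sysctl net.bridge.bridge-nf-call-iptables net.bridge.bridge-nf-call-ip6tables net.ipv4.ip_forward`.

As a scriptable variant, the hypothetical `check_k8s_sysctls` below reads the same three values directly from /proc/sys, or from another directory passed as an argument. It fails if any value is missing or not 1.

```bash
# Hypothetical helper: confirm the three kernel parameters set above are 1.
# The proc root is a parameter (default /proc/sys) so a copy can be checked too;
# the bridge keys only exist once the br_netfilter module is loaded.
check_k8s_sysctls() {
  local root="${1:-/proc/sys}" key path value rc=0
  for key in net.bridge.bridge-nf-call-iptables \
             net.bridge.bridge-nf-call-ip6tables \
             net.ipv4.ip_forward; do
    path="$root/$(printf '%s' "$key" | tr . /)"   # net.ipv4.ip_forward -> net/ipv4/ip_forward
    if [ ! -r "$path" ]; then
      echo "MISSING $key" >&2
      rc=1
      continue
    fi
    value="$(cat "$path")"
    if [ "$value" != "1" ]; then
      echo "WRONG   $key = $value" >&2
      rc=1
    fi
  done
  return "$rc"
}
```

Usage: `check_k8s_sysctls && echo "sysctl settings OK"`.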

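Install containerd

- Download and install version 1.7.13

```bash
wget https://github.com/containerd/containerd/releases/download/v1.7.13/cri-containerd-cni-1.7.13-linux-amd64.tar.gz
# Extract into / (the archive contains containerd, runc, the CNI plugins and a containerd systemd unit)
sudo tar -zxvf cri-containerd-cni-1.7.13-linux-amd64.tar.gz -C /
containerd -v
```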
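Before extracting, it is worth checking the download against the digest published on the containerd release page.

This is a minimal sketch. It assumes the release provides a checksum file named `cri-containerd-cni-1.7.13-linux-amd64.tar.gz.sha256sum` whose first field is the SHA-256 digest. `verify_sha256` is a hypothetical helper.

```bash
# Hypothetical helper: compare a file's SHA-256 digest with an expected value.
verify_sha256() {
  local file="$1" expected="$2" actual
  actual="$(sha256sum "$file" | awk '{print $1}')"
  if [ "$actual" = "$expected" ]; then
    echo "OK  $file"
  else
    echo "BAD $file: expected $expected, got $actual" >&2
    return 1
  fi
}
```

Usage: `verify_sha256 cri-containerd-cni-1.7.13-linux-amd64.tar.gz "$(awk '{print $1}' cri-containerd-cni-1.7.13-linux-amd64.tar.gz.sha256sum)"`.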

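- Configure containerd

```bash
# Create the configuration directory
sudo mkdir -p /etc/containerd

# Generate the default configuration file
containerd config default | sudo tee /etc/containerd/config.toml

# Edit the configuration file
sudo vi /etc/containerd/config.toml
```

Change the following settings:

- set sandbox_image to registry.aliyuncs.com/google_containers/pause:3.9
- set SystemdCgroup to true
- under [plugins."io.containerd.grpc.v1.cri".registry], set config_path so that containerd reads the per-registry hosts.toml files in /etc/containerd/certs.d. Without it (the default is an empty string), neither a private registry addressed by IP nor the registry mirrors configured below take effect.

```toml
[plugins."io.containerd.grpc.v1.cri".registry]
  config_path = "/etc/containerd/certs.d"
```

For a private registry addressed by IP, create /etc/containerd/certs.d/<registry-name>/hosts.toml with the following content:

```toml
server = "http://10.0.0.10:5000"

[host."10.0.0.10:5000"]
  capabilities = ["pull", "resolve", "push"]
  skip_verify = true
```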
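The vi edits can also be scripted. This sketch sets `sandbox_image` and `SystemdCgroup`, and sets `config_path` to /etc/containerd/certs.d inside the CRI registry section.

It assumes the file was generated by `containerd config default` for containerd 1.7 and that GNU sed is available. With that layout, each of the three keys sits on its own line. `patch_containerd_config` is a hypothetical helper.

```bash
# Hypothetical helper: apply the containerd config.toml edits with sed instead of vi.
# Assumes the layout produced by `containerd config default` (containerd 1.7).
patch_containerd_config() {
  local cfg="${1:-/etc/containerd/config.toml}"
  local pause="${2:-registry.aliyuncs.com/google_containers/pause:3.9}"
  sed -i -E \
    -e "s|^([[:space:]]*)sandbox_image = .*|\1sandbox_image = \"${pause}\"|" \
    -e 's|^([[:space:]]*)SystemdCgroup = false|\1SystemdCgroup = true|' \
    -e '/^[[:space:]]*\[plugins\."io\.containerd\.grpc\.v1\.cri"\.registry\]/,/^[[:space:]]*\[/ s|^([[:space:]]*)config_path = .*|\1config_path = "/etc/containerd/certs.d"|' \
    "$cfg"
  # The last expression only touches config_path between the registry header
  # and the next section header, so other sections are left unchanged.
}
```

Afterwards, `grep -nE 'sandbox_image|SystemdCgroup|config_path' /etc/containerd/config.toml` shows the three values for a quick review.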

```bash
# Configure Docker Hub registry mirrors
sudo mkdir -p /etc/containerd/certs.d/docker.io
cat <<EOF | sudo tee /etc/containerd/certs.d/docker.io/hosts.toml
server = "https://docker.io"

[host."https://dockerproxy.cn"]
  capabilities = ["pull", "resolve"]

[host."https://docker.m.daocloud.io"]
  capabilities = ["pull", "resolve"]
EOF

# Configure a registry.k8s.io registry mirror
sudo mkdir -p /etc/containerd/certs.d/registry.k8s.io
sudo tee /etc/containerd/certs.d/registry.k8s.io/hosts.toml <<'EOF'
server = "https://registry.k8s.io"

[host."https://k8s.m.daocloud.io"]
  capabilities = ["pull", "resolve", "push"]
EOF

# Start containerd and enable it at boot
sudo systemctl enable --now containerd
```
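The hosts.toml files differ only in registry name, upstream server and mirror list, so they can be generated by one function.

This is a sketch. `write_mirror_hosts_toml` is hypothetical, and unlike the registry.k8s.io file it grants mirrors only `pull` and `resolve`. The files only take effect when `config_path` points at /etc/containerd/certs.d.

```bash
# Hypothetical helper: write <certs_dir>/<registry>/hosts.toml with one upstream
# server and one or more pull-only mirrors, in the same format as the files above.
write_mirror_hosts_toml() {
  local certs_dir="$1" registry="$2" upstream="$3"
  shift 3
  local dir="$certs_dir/$registry" mirror
  mkdir -p "$dir" || return 1
  {
    printf 'server = "%s"\n' "$upstream"
    for mirror in "$@"; do
      printf '\n[host."%s"]\n  capabilities = ["pull", "resolve"]\n' "$mirror"
    done
  } > "$dir/hosts.toml"
}
```

Usage: `sudo bash -c "$(declare -f write_mirror_hosts_toml); write_mirror_hosts_toml /etc/containerd/certs.d docker.io https://docker.io https://dockerproxy.cn https://docker.m.daocloud.io"`.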

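Install Kubernetes

- Install kubeadm, kubectl and kubelet

```bash
# Add the Aliyun mirror of the Kubernetes apt repository
echo "deb https://mirrors.aliyun.com/kubernetes/apt/ kubernetes-xenial main" | sudo tee /etc/apt/sources.list.d/kubernetes.list

# Refresh the package index
sudo apt-get update
# If apt-get update reports "... kubernetes-xenial InRelease' is not signed", add the repository key
# and run apt-get update again (apt-key is deprecated on Ubuntu 22.04 and prints a warning, but still works):
# curl -fsSL https://mirrors.aliyun.com/kubernetes/apt/doc/apt-key.gpg | sudo apt-key add -

# Install pinned versions of the three packages
sudo apt-get install -y kubelet=1.27.1-00 kubeadm=1.27.1-00 kubectl=1.27.1-00

# Hold all three packages so that routine upgrades do not change their versions
sudo apt-mark hold kubelet kubeadm kubectl
```

Note that kubernetes-xenial is the legacy repository layout. Upstream packages have since moved to pkgs.k8s.io, which uses one repository per minor version and version strings such as 1.27.1-1.1 instead of 1.27.1-00.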
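To confirm that all three packages are actually pinned, use `apt-mark showhold`, which lists held packages one per line.

The hypothetical `check_k8s_holds` reads that list on stdin. It reports any of kubelet, kubeadm or kubectl that is missing from it.

```bash
# Hypothetical helper: read `apt-mark showhold` output on stdin and succeed only
# if kubelet, kubeadm and kubectl are all on hold.
check_k8s_holds() {
  local held pkg rc=0
  held="$(cat)"
  for pkg in kubelet kubeadm kubectl; do
    if ! grep -qx "$pkg" <<< "$held"; then
      echo "not held: $pkg" >&2
      rc=1
    fi
  done
  return "$rc"
}
```

Usage: `apt-mark showhold | check_k8s_holds && echo "all three packages are held"`.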

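Initialize the Cluster on the Master Node

- Initialize the cluster

```bash
# Generate the default configuration file
kubeadm config print init-defaults > kubeadm.yaml
```

Edit kubeadm.yaml as follows: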

```yaml
apiVersion: kubeadm.k8s.io/v1beta3
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.112.174 # master node IP
  bindPort: 6443
nodeRegistration:
  criSocket: unix:///var/run/containerd/containerd.sock
  imagePullPolicy: IfNotPresent
  name: k8s-master # optionally change the name of this node
  taints: null
---
apiServer:
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta3
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controllerManager: {}
dns: {}
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: registry.aliyuncs.com/google_containers # use a mirror repository
kind: ClusterConfiguration
kubernetesVersion: 1.27.1 # set to the installed version; check it with kubelet --version
networking:
  dnsDomain: cluster.local
  podSubnet: 10.244.0.0/16 # added: pod subnet
  serviceSubnet: 10.96.0.0/12
scheduler: {}
---
# Added: use systemd as the kubelet cgroup driver (matching SystemdCgroup = true in containerd)
apiVersion: kubelet.config.k8s.io/v1beta1
kind: KubeletConfiguration
cgroupDriver: systemd
```
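Instead of editing kubeadm.yaml by hand, the same changes can be applied to the generated defaults with sed.

This is a sketch. It assumes the key layout printed by `kubeadm config print init-defaults` for 1.27: one `name:` key, and `serviceSubnet` under `networking`. It also assumes GNU sed. `patch_kubeadm_defaults` is hypothetical and safe to run twice.

```bash
# Hypothetical helper: apply the kubeadm.yaml edits to `kubeadm config print init-defaults` output.
# Arguments: file, master IP, node name, Kubernetes version.
patch_kubeadm_defaults() {
  local file="$1" ip="$2" node="$3" version="$4"
  local repo="registry.aliyuncs.com/google_containers" subnet="10.244.0.0/16"
  sed -i -E \
    -e "s|^([[:space:]]*advertiseAddress:).*|\1 ${ip}|" \
    -e "s|^([[:space:]]*name:).*|\1 ${node}|" \
    -e "s|^(imageRepository:).*|\1 ${repo}|" \
    -e "s|^(kubernetesVersion:).*|\1 ${version}|" \
    "$file" || return 1
  # Insert podSubnet just above serviceSubnet, reusing its indentation (GNU sed \n in replacement).
  if ! grep -q 'podSubnet:' "$file"; then
    sed -i -E "s|^([[:space:]]*)serviceSubnet:(.*)|\1podSubnet: ${subnet}\n\1serviceSubnet:\2|" "$file" || return 1
  fi
  # Append a KubeletConfiguration document that selects the systemd cgroup driver.
  if ! grep -q 'kind: KubeletConfiguration' "$file"; then
    printf '%s\n' '---' 'apiVersion: kubelet.config.k8s.io/v1beta1' \
      'kind: KubeletConfiguration' 'cgroupDriver: systemd' >> "$file"
  fi
}
```

Usage: `patch_kubeadm_defaults kubeadm.yaml 192.168.112.174 k8s-master 1.27.1`. Review the file before running kubeadm init.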

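```bash
# Initialize the cluster
sudo kubeadm init --config kubeadm.yaml --v=6

# If initialization fails, run reset before trying again; otherwise kubeadm reports
# that the configuration files it needs already exist and refuses to initialize.
# (reset does not remove /etc/cni/net.d, iptables rules or $HOME/.kube/config.)
sudo kubeadm reset
```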

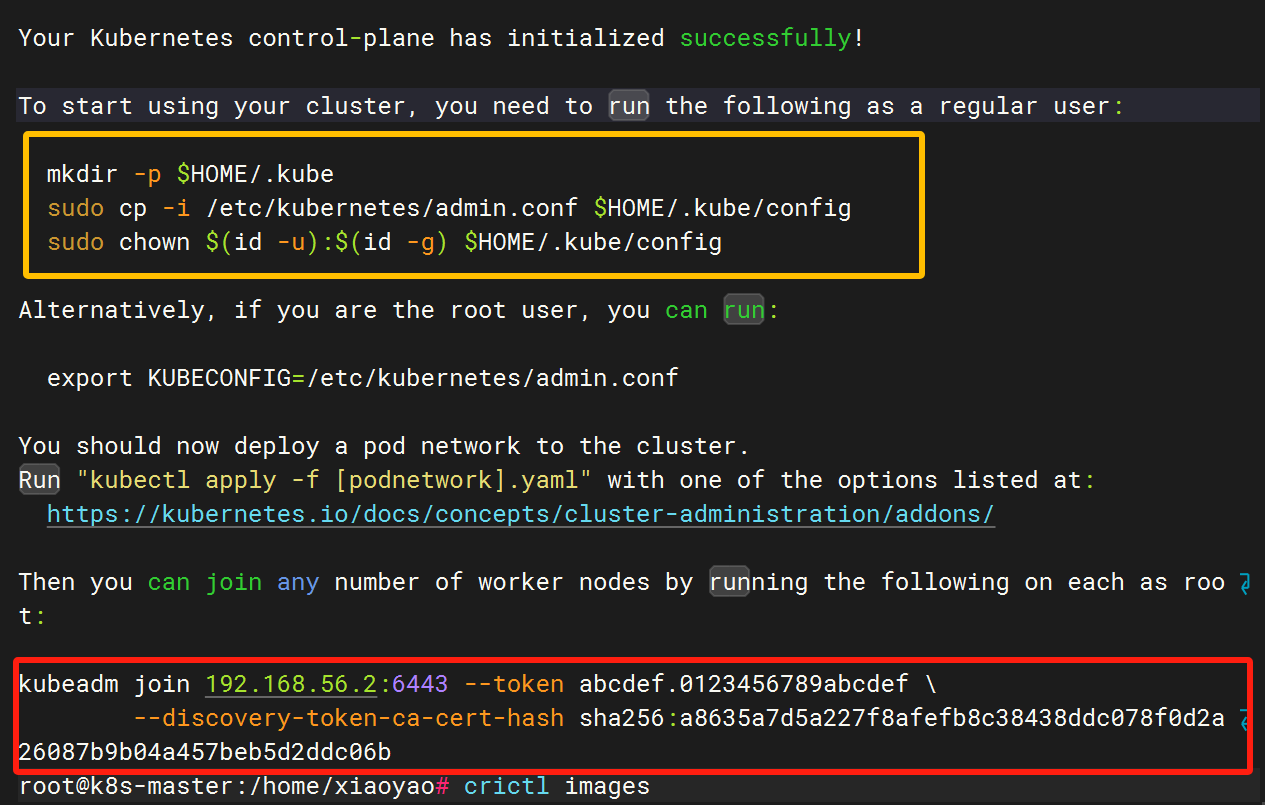

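- After a successful initialization, the output looks like the screenshot above. Run the commands in the yellow box on this (master) node, and run the command in the red box on each worker node to join the cluster.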
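If the init output scrolls away, `kubeadm token create --print-join-command` on the master prints a fresh worker join command.

If the output was saved instead (for example with `sudo kubeadm init --config kubeadm.yaml | tee kubeadm-init.log`; the log file name is only an example), the sketch below pulls the join command out of it. It also rejoins the command's backslash-continued lines. `extract_join_command` is hypothetical.

```bash
# Hypothetical helper: print the "kubeadm join ..." command(s) found in saved
# kubeadm init output (file argument or stdin), joining backslash-continued lines.
extract_join_command() {
  awk '
    /^[[:space:]]*kubeadm join/ { capturing = 1; cmd = "" }
    capturing {
      line = $0
      sub(/^[[:space:]]+/, "", line)              # drop leading indentation
      cont = sub(/[[:space:]]*\\$/, "", line)     # strip a trailing backslash and note whether one was present
      cmd = (cmd == "" ? line : cmd " " line)
      if (!cont) { print cmd; capturing = 0 }
    }
  ' "${1:--}"
}
```

Usage: `extract_join_command kubeadm-init.log`. Run the printed command with sudo on each worker node.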

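Join the Worker Nodes to the Cluster

- This article builds the VMs with VirtualBox: the two worker Ubuntu VMs are clones, and each clone's hostname and static IP are then changed. kubeadm expects every node to have a unique MAC address and product_uuid, so make sure the clones get new MAC addresses.
- Run the kubeadm join xxx command on each worker to join the cluster.
- After joining, running kubectl on a worker may fail with "localhost:8080 was refused", because the worker has no kubeconfig file. Copy the kube config from the master node to the worker:

```bash
# Copy admin.conf; run this command on the master node:
scp /etc/kubernetes/admin.conf 192.168.112.175:/etc/kubernetes/admin.conf

# Then, on that worker node, point kubectl at the copied kubeconfig:
export KUBECONFIG=/etc/kubernetes/admin.conf
echo "export KUBECONFIG=/etc/kubernetes/admin.conf" >> ~/.bashrc

# kubectl now works on the worker node
```

- If kubeadm join fails with "Port 10250 is in use", append --ignore-preflight-errors=Port-10250 to the kubeadm join command. This error usually means a kubelet from an earlier join attempt is still running; running sudo kubeadm reset on that worker before joining again is the cleaner fix.
- Run kubectl get nodes to check the node status: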

```text
root@k8s-node2:~# kubectl get no
NAME         STATUS   ROLES           AGE    VERSION
k8s-master   Ready    control-plane   171m   v1.27.1
k8s-node1    Ready    <none>          119m   v1.27.1
k8s-node2    Ready    <none>          36s    v1.27.1

root@k8s-node2:~# kubectl get cs
Warning: v1 ComponentStatus is deprecated in v1.19+
NAME                 STATUS    MESSAGE                         ERROR
etcd-0               Healthy   {"health":"true","reason":""}
scheduler            Healthy   ok
controller-manager   Healthy   ok

root@k8s-node2:~# kubectl get po -n kube-system
NAME                                 READY   STATUS    RESTARTS       AGE
coredns-5d78c9869d-72nqj             1/1     Running   1 (131m ago)   174m
coredns-5d78c9869d-j28vx             1/1     Running   1 (131m ago)   174m
etcd-k8s-master                      1/1     Running   1 (131m ago)   174m
kube-apiserver-k8s-master            1/1     Running   1 (131m ago)   174m
kube-controller-manager-k8s-master   1/1     Running   1 (131m ago)   174m
kube-proxy-b4g7j                     1/1     Running   1 (131m ago)   174m
kube-proxy-snk4w                     1/1     Running   0              3m56s
kube-proxy-t54c5                     1/1     Running   0              122m
kube-scheduler-k8s-master            1/1     Running   1 (131m ago)   174m

root@k8s-node2:~# kubectl get ns
NAME              STATUS   AGE
default           Active   175m
kube-node-lease   Active   175m
kube-public       Active   175m
kube-system       Active   175m
```
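`kubectl wait --for=condition=Ready nodes --all --timeout=300s` blocks until every node reports Ready.

For a quick one-shot check in a script, the hypothetical `all_nodes_ready` below parses `kubectl get nodes` output like the listing above. It fails if any node's STATUS is not exactly `Ready`.

```bash
# Hypothetical helper: read `kubectl get nodes` output on stdin and succeed only
# if there is at least one node and every node's STATUS column is exactly "Ready".
all_nodes_ready() {
  awk 'NR == 1 { next }                       # skip the NAME/STATUS header line
       { total++; if ($2 != "Ready") { print "not ready: " $1 > "/dev/stderr"; bad++ } }
       END { exit (total == 0 || bad > 0) }'
}
```

Usage: `kubectl get nodes | all_nodes_ready && echo "all nodes Ready"`. A node shown as `Ready,SchedulingDisabled` is deliberately treated as not ready.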

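CNI Setup

- This article covers two CNI options. Flannel or Calico is sufficient for basic scenarios; this article uses Kube-OVN as the CNI plugin because it needs to validate specific application scenarios.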

1. Flannel

```bash
wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml
```

In the downloaded file, replace the docker.io images with the docker.m.daocloud.io mirror, for example:

```yaml
image: docker.m.daocloud.io/flannel/flannel:v0.25.4
```

```bash
kubectl apply -f kube-flannel.yml
```
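The image replacement can be done with sed rather than by hand.

This is a sketch. It assumes the manifest references images with an explicit `docker.io/` prefix; images without a registry prefix are left untouched. `use_daocloud_mirror` is a hypothetical helper.

```bash
# Hypothetical helper: point every "image: docker.io/..." line (including "- image:")
# of a manifest at the docker.m.daocloud.io mirror; all other lines are unchanged.
use_daocloud_mirror() {
  local manifest="${1:-kube-flannel.yml}"
  sed -i -E 's#^([[:space:]]*(- )?image:[[:space:]]*)docker\.io/#\1docker.m.daocloud.io/#' "$manifest"
}
```

Usage: `use_daocloud_mirror kube-flannel.yml && grep -n 'image:' kube-flannel.yml` to review the images before running kubectl apply.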

Then write the flannel CNI configuration to /etc/cni/net.d/ (this requires root) and restart the services:

```bash
# Write the CNI configuration file as root
sudo mkdir -p /etc/cni/net.d
cat <<'EOF' | sudo tee /etc/cni/net.d/10-flannel.conflist
{
  "name": "cbr0",
  "cniVersion": "0.3.1",
  "plugins": [
    {
      "type": "flannel",
      "delegate": {
        "hairpinMode": true,
        "isDefaultGateway": true
      }
    },
    {
      "type": "portmap",
      "capabilities": {
        "portMappings": true
      }
    }
  ]
}
EOF

# Restart the services
sudo systemctl restart containerd
sudo systemctl restart kubelet
```
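A typo in the heredoc produces an invalid CNI configuration without any immediate error.

This is a small check. It assumes python3 is available, as it normally is on Ubuntu 22.04 server, so its `json.tool` module can parse the file. `check_flannel_conflist` is hypothetical.

```bash
# Hypothetical helper: check that a CNI .conflist file is well-formed JSON and
# declares the flannel plugin. Relies on python3's json.tool module for parsing.
check_flannel_conflist() {
  local file="${1:-/etc/cni/net.d/10-flannel.conflist}"
  python3 -m json.tool "$file" > /dev/null || { echo "invalid JSON: $file" >&2; return 1; }
  grep -q '"type": *"flannel"' "$file" || { echo "no flannel plugin in $file" >&2; return 1; }
}
```

Usage: `check_flannel_conflist && echo "flannel conflist OK"`, run before restarting containerd and kubelet.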

2. Kube-OVN

- Install it by following the official Kube-OVN documentation.